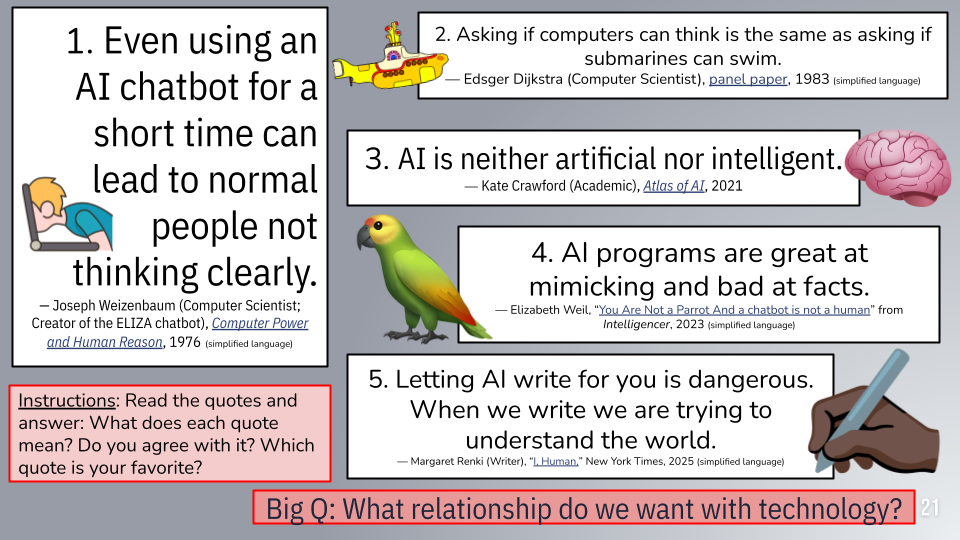

Critical AI Quotes Activity

Running the activity in the classroom

The AI quotes activity was created as an introductory activity to encourage people to consider critical interpretations of AI that go beyond simply seeing AI as a tool that always results in social progress. Educators can use all the quotes in full, choose some of them, or use simplified versions. The Google Slides linked below are intended to be printed out and posted on classroom walls for a “gallery walk,” but teachers can adapt the activity to their own contexts.

We have continued adding quotes to this page as our community shares new ones with us. Only the quotes we have used in the classroom appear in the slides, but the slides are easy to modify to include different quotes. Please continue to check back for updates.

Creators: Dan Krutka and Marie Heath

What are different ways to think about AI?

AI quotes activity instructions: Read the quotes and consider the following questions:

What do you believe each quote means?

How does the time and place from which it originates affect its meaning for today?

Which quote most resonates with you—for better or worse?

Jot down your responses and prepare to discuss them with a partner or in small groups.

The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform. It can follow analysis; but it has no power of anticipating any analytical relations or truths.

— Ada Lovelace (English mathematician), “Notes on the Analytical Engine,” 1843

What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.

— Joseph Weizenbaum (Computer Scientist; Creator of the ELIZA chatbot), Computer Power and Human Reason, 1976

The question whether computers can think… is just as relevant and just as meaningful as the question whether submarines can swim.

— Edsger Dijkstra (Computer Scientist), panel paper, 1983

…Success in creating AI could be the biggest event in the history of our civilisation. But it could also be the last, unless we learn how to avoid the risks. Alongside the benefits, AI will also bring dangers, like powerful autonomous weapons, or new ways for the few to oppress the many.

— Professor Stephen Hawking’s speech at the launch of the Leverhulme Centre for the Future of Intelligence, October 19, 2016

AI is good at describing the world as it is today with all of its biases, but it does not know how the world should be.

— Joanne Chen (General Partner, Foundation Capital, Computer Science Engineer), SXSW, 2018

No statistical prediction can or will ever be 100 percent accurate—because human beings are not and never will be statistics.

— Meredith Broussard, Artificial Unintelligence: How Computers Misunderstand the World, 2018, p. 118

I'm not worried about machines taking over the world, I'm worried about groupthink, insularity, and arrogance in the AI community.

— Timnit Gebru (Computer Scientist) as quoted in “‘There was all sorts of toxic behaviour’: Timnit Gebru on her sacking by Google, AI’s dangers and big tech’s biases,” The Guardian, 2023

AI will not solve poverty, because the conditions that lead to societies that pursue profit over people are not technical. AI will not solve discrimination... the cultural patterns... are not technical.

— Joy Buolamwini (Computer Scientist & Digital Activist), Unmasking AI: My Mission to Protect What Is Human in a World of Machines, 2023, p. xix

…AI is neither artificial nor intelligent. Rather, artificial intelligence is both embodied and material, made from natural resources, fuel, human labor, infrastructures, logistics, histories, and classifications. AI systems are not autonomous, rational, or able to discern anything without extensive, computationally intensive training with large datasets or predefined rules and rewards. In fact, artificial intelligence as we know it depends entirely on a much wider set of political and social structures. And due to the capital required to build AI at scale and the ways of seeing that it optimizes AI systems are ultimately designed to serve existing dominant interests. In this sense, artificial intelligence is a registry of power.

— Kate Crawford (Academic), Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence, 2021

[Large language models are] great at mimicry and bad at facts… This makes LLMs beguiling, amoral, and the Platonic ideal of the bullshitter, as philosopher Harry Frankfurt, author of On Bullshit, defined the term. Bullshitters, Frankfurt argued, are worse than liars. They don’t care whether something is true or false. They care only about rhetorical power — if a listener or reader is persuaded.

— Elizabeth Weil, “You Are Not a Parrot: And a chatbot is not a human” from Intelligencer, March 1, 2023

Currently, too many comparisons between “AI” and Magic serve to either hype up what “AI” can do, or wave away the things “AI” companies and designers want hidden from the public— indicating a fundamental misunderstanding of both magic and “AI”....But the present magic of “AI” is performed on us without our input, without our knowledge, and without our consent. There’s another word for that kind of magic; binding, subjugating magic performed on you against your will is called a curse.

— Damien Patrick Williams (Philosophy & Data Science Professor), “Any sufficiently transparent magic . . .” in American Religion [journal], 2023, pp. 107-108

We need people in our lives, not the simulation of people.

— L. M. Sacasas (Professor), “Embracing Sub-Optimal Relationships” from The Convivial Society [blog], 2024, para. 13

Our tools are cultural not merely technological, so while many people want to frame the emergence of generative AI as simply the latest development in the long history of computers, of artificial intelligence -- transformers, neural networks, tokens, and so on -- we have to remember that what emerges is not just a matter of engineering. It's a matter of markets and politics and ideology and culture.

— Audrey Watters (Tech Critic), “AI Grief Observed” from Second Breakfast [blog], 2025, para. 16

Letting a robot structure your argument, or flatten your style by removing the quirky elements, is dangerous. It’s a streamlined way to flatten the human mind, to homogenize human thought. We know who we are, at least in part, by finding the words — messy, imprecise, unexpected — to tell others, and ourselves, how we see the world. The world which no one else sees in exactly that way.

— Margaret Renkl (Writer), “I, Human,” New York Times, 2025

But A.I. is a parasite. It attaches itself to a robust learning ecosystem and speeds up some parts of the decision process. The parasite and the host can peacefully coexist as long as the parasite does not starve its host. The political problem with A.I.’s hype is that its most compelling use case is starving the host — fewer teachers, fewer degrees, fewer workers, fewer healthy information environments.

— Tressie McMillan Cottom (Professor of Sociology, Technology, & Society), “The Tech Fantasy That Powers A.I. Is Running on Fumes,” New York Times, 2025

This is not to say that generative AI cannot be useful, but as opposed to seeing it as a “co-intelligence” or research partner, we should instead see generative AI as something closer to this:

A Lab will bring us anything: a toy, a dead squirrel, a living squirrel, a rock, a stick, a gold bar. They are friendly, indefatigable and non-discerning.

— John Warner (Education writer), “The Limits of AI Research for Real Writers,” The Biblioracle Recommends [blog], 2025

Want even more quotes?

Community member Daniel Peck created her own AI Quote Activity in an effort to answer the question, “How can I encourage educators and their students to engage in Critical AI Literacies?” Access it using the links below, and learn more in our blog post on the activity. We have integrated several of her quotes into our activity above.