Data is Power: Teaching Critical AI Literacy in K-12

Civics of Technology Announcements

Curriculum Crowdsourcing: One of the most popular activities in our Curriculum is our Critical Tech Quote Activity because the lesson offers a quick way to introduce a range of critical perspectives to students. We have decided to create a Critical AI Quote Activity and we want your help. Please share your favorite quotes about AI, along with the author and source, via our Contact page. We will collect them, write the lesson, and share it when it is ready!

December Book Club: Michelle Levesly is leading a book club of Resisting AI by Dan McQuillan. Join us to discuss at 10:30 AM EST on Wednesday, December 3rd, 2025. Head to the events page to register!

Editors’ note: Data is Power is seeking applications from schools, districts, and classrooms for the 2025-26 school year. Learn more and apply by December 8th, 2025 (it’s a short application). Read more about the work of Data is Power in today’s blog!

By Evan Shieh (Young Data Scientists League)

Ever since the launch of ChatGPT, young people have been bombarded with warnings that their jobs are under threat from advanced, super-human AI models that don’t need to eat, drink, or sleep. Headlines like “ChatGPT could be smarter than your professor in the next 2 years” and “Even the scientists who build AI can’t tell you how it works” suggest that the AI industry is for the select few, using language that disproportionately discourages minoritized learners and students who don’t consider themselves to be “STEM people”.

While AI fearmongering may provoke anxiety among our youth, students are far from powerless. Like their adult counterparts, they are eager to learn complex ideas and apply them to address issues in their communities. Especially when it comes to AI ethics and justice, youth are uniquely situated to identify and challenge AI harms that are often overlooked by “AI experts”. At YDSL, we learned that providing K-12 students and educators with introductory AI ethics training not only encouraged them to analyze deeper ethical questions, but also empowered them to produce cutting-edge critical AI research using content knowledge already taught in K-12 classrooms. Youth are not just “our future”; they are a force to be reckoned with today.

The “Instruction Manual” Era of AI Literacy

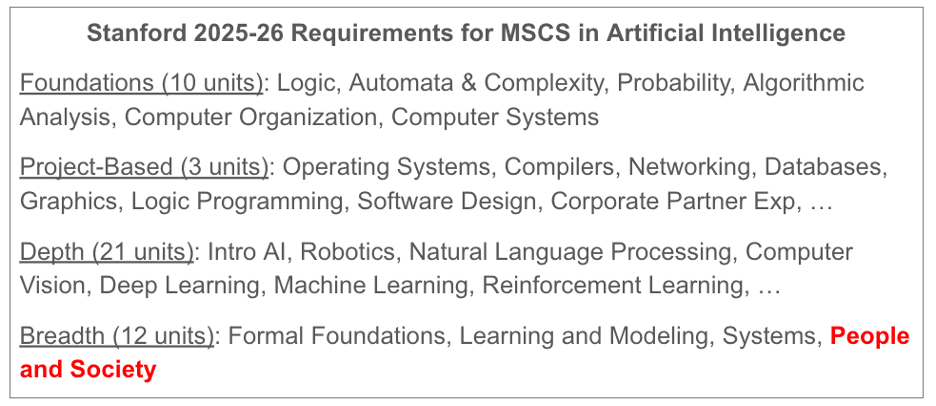

Why does the AI industry, dominated by highly paid PhD holders from prestigious institutions, continue to struggle with ethical harms affecting learners and educators? As with many societal issues, this question asks us to look at what forms of knowing are being (de)valued. In higher ed, most STEM classrooms have now adapted to generative AI, largely by teaching its technical foundations or how to “use AI responsibly”. Despite this lip service, most educational pathways to the tech industry have remained essentially unchanged over the last decade (see Figure 1). Ethics courses continue to be sidelined and siloed in favor of technical training, theoretical foundations, and how to train and use AI models. By marginalizing AI’s sociotechnical dimensions (such as real-world model evaluation), the dominant paradigm in AI literacy essentially teaches a glorified instruction manual dressed up as a pedagogy-shaped object.

Fig 1. Stanford’s 2025-26 Requirements for a CS Master’s specialization in AI (source). Nearly all course tracks have remained the same in the last decade. The subrequirement containing AI ethics courses is People and Society; however, this requirement can be bypassed by taking applied geometry courses like CS148 (Computer Graphics) in lieu of AI ethics. Some courses embed AI ethics units at the discretion of individual instructors.

Elevating Community-Led AI Literacies

Building an AI literacy that serves humanity requires decolonizing the prototypical AI expert. Last year, I conducted over sixty interviews with urban K-12 students, educators, and justice practitioners to understand how they approach AI ethics. Their perspectives were deep and far-ranging.

I spoke with students in Chicago who are concerned with how AI affects student mental health, learning, and career development. I met with a teacher in Oakland who taught elementary school students how AI intersects with environmental racism. The nuanced moral frameworks I heard were personal, diverse, and often in tension with each other. I spoke with teachers in Miami who were thinking of using AI as a potential tool to enrich their own historically underserved communities. But when it came to their students using AI, they raised concerns about algorithmic bias and capitalist Big Tech agendas further exacerbating inequalities.

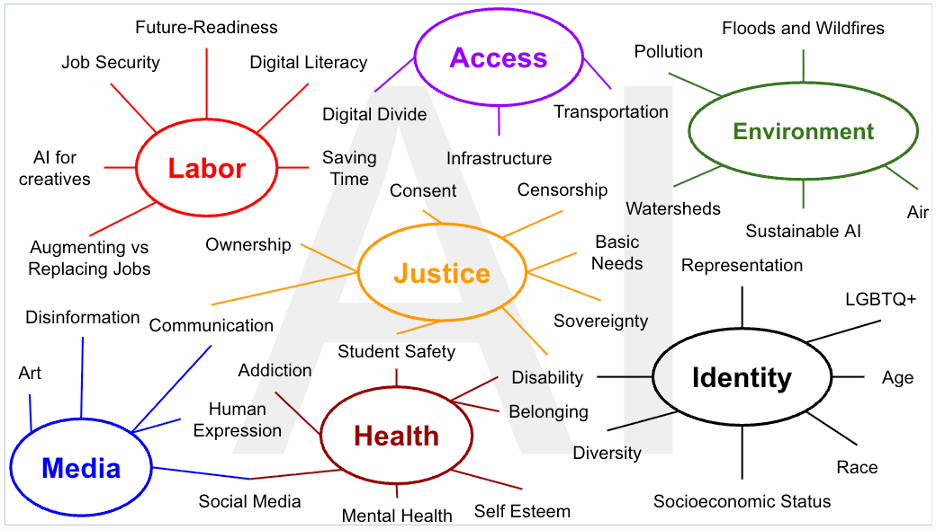

Fig 2. Participatory concept map of AI ethics literacy, based on 64 interviews conducted with K-12 students, educators, and AI justice practitioners in the YDSL community.

I learned that K-12 students and educators are not only eager to shape the AI field, but are uniquely capable of doing so. “AI is going to be one of those things where, as a society, we have a responsibility to make sure everyone has access in multiple ways”, said Milton Johnson, an experienced educator who teaches the physics of engineering at Bioscience High School in Phoenix.

Empowering everyone means grounding AI literacy in multiple disciplines, including both STEM and the humanities (see Figure 2). “AI is not just for computer scientists who are coding and finding datasets. There are individuals who need to be looking at the ethics”, said Karren Boatner, who teaches English literature at Whitney M. Young Magnet High School in Chicago.

Data is Power

These conversations pointed towards an urgent need to provide educators with instructional resources to teach critical AI literacy. We partnered with a subset of our interviewees to co-design Data is Power, a K-12 AI ethics program consisting of four learning modules that cover topics identified by our communities (see Figure 2). Each module teaches an aspect of critical AI research by applying K-12 learning standards across math, ELA, science, and CS.

Each lesson centers the stories of diverse students and educators who have shaped the field of AI ethics and data justice. We explore algorithmic bias in facial recognition technologies through a classroom activity that extends Dr. Joy Buolamwini’s graduate thesis research, Gender Shades. Our students learn to audit AI technologies by collecting data from an anti-surveillance fashion exercise built by student educators at YR Media in Oakland. They develop frameworks for the labor ethics of AI’s supply chain by following the wage theft research of educator-turned-researcher Adrienne Williams. Finally, following in the footsteps of critical student research activists like Gwendolyn Warren, our students lead their own original research studies on community-relevant topics.

For their final project, Karren’s students applied the knowledge they learned in class to research how AI might help or hurt the environment, from technological advancements in green energy to how data centers might increase water consumption and exacerbate environmental racism. According to Karren, studying AI ethics through a critical lens helped her students learn to distinguish between rigorous research and AI propaganda, a skill that she believes will be useful for civic participation and career-readiness in the future.

“One of the most significant shifts I observed was in student engagement... students who are typically hesitant to share began to contribute more regularly, especially when discussing social justice, identity, and technology.”

- Data is Power STEM Educator

Piloting Data is Power taught us that critical AI literacy can be a rewarding experience for both students and educators. In June, two of Karren’s students presented at one of the world’s largest AI ethics conferences, alongside other students from Data is Power. Anecdotally, empowering students to contribute to the broader societal discussion on AI ethics is also a great antidote to AI anxiety. We’re honored to continue our work this school year in collaboration with Civics of Technology through partnerships such as NYC Public Schools’ EEAI fellowship.

Interested in bringing critical AI literacy to your classroom? Data is Power is seeking applications for the 2025-26 school year. Learn more and apply by December 8th, 2025 (it’s a short application). We’d love to hear from you!